Data quality tools focus on validation, profiling, and rule-based checks to ensure data meets defined standards. Observability tools focus on monitoring data pipelines and detecting anomalies in real time. Many modern platforms combine both approaches.

Data quality used to be handled by spreadsheets and a lot of late-night firefighting. Today it’s a discipline in its own right: tooling, processes, people, and – increasingly – automated detection and observability. In this piece, we’ll walk you through what to look for in a data quality tool, what tools are out there, and how they integrate into different data ecosystems.

What to look for in a data quality tool: key capabilities

Data quality is not a self-contained discipline. Yes, it has its own data quality metrics, but it also intertwines with many other aspects of data management and governance. So data quality tools are rarely standalone software, either. They need to fit with your other data management tools like a missing puzzle piece (which they so often are).

And finding the right fit is somewhat like hiring a new team member. Whether they fit the job or not depends on skills (or features), profile, and how they’ll work with the rest of your organization. (You see the resemblance.) So, you should vet them against all of the above.

This article is specifically about available tools, so we won’t go into too much detail about the vetting process here. But below are the general capabilities to look for first. For a step-by-step guide on selecting an enterprise data quality tool, including building a business case, creating a vendor scorecard, and running a compelling proof of concept, go to our recent article: How to choose a data quality platform.

Now, back to the key capabilities of a data quality platform, based on common data quality best practices:

- Clear visibility into your data

A good tool should help you quickly understand what data you have, where it comes from, and how it’s being used. That means easy-to-read data quality dashboards and data profiling that shows you patterns, outliers, and gaps without needing a data science degree. Many data quality tools already do a good job here. - Automation that saves time (and sanity)

Data teams shouldn’t have to spend hours checking whether yesterday’s numbers look right. Modern tools use automation, and sometimes AI, to constantly monitor data health, detect issues early, and reduce manual effort. The results are fewer surprises and more time spent on strategy rather than just firefighting. - Business rules made simple

Every business has its own definition of “good data.” A strong data quality tool lets business users define these rules in plain language and automatically checks whether the data follows them. - The right alerts, to the right people

Look for tools that can prioritize alerts based on business impact and route them to the right teams, whether that’s marketing, finance, or IT. - Context for faster fixes

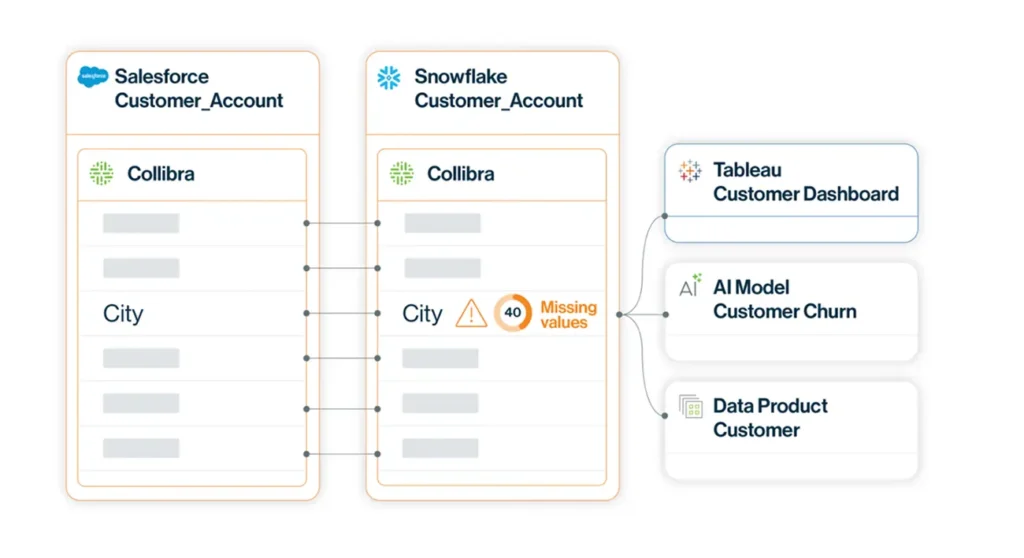

Tools with built-in data lineage show where the data came from and where it goes, making it easier to trace issues back to the source and fix them – before they affect reports, dashboards, or customer experiences. - Integration with your existing ecosystem

Your data lives in many places – warehouses, lakes, cloud platforms, spreadsheets, BI tools, and other SaaS apps. A good tool should connect to all of them, seamlessly, without forcing you to entirely rebuild how you work. - Governance and accountability

Data quality is as much about the technology as it is about people and processes. Look for tools that provide a transparent insight into data ownership, policies, and issue resolution. - Scalability as you grow

When data volumes are only going up, you need to choose a solution that grows with you. One that can handle more data, more sources, and more users without becoming unmanageable or cost-prohibitive in the process. (Hint: we know how to optimize to stay efficient and avoid overpaying for tools and storage as your data estate grows exponentially). - Insights that drive action

The goal of data quality initiatives is to ultimately improve the data based on the errors you spot. And, by extension, to improve the decisions that rely on the data. Tools that highlight trends, recurring problems, and business impact can help you prioritize fixes that really move the needle for the business.
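To make “profiling” and “business rules” less abstract, here’s a minimal Python sketch of what those two capabilities compute under the hood. It’s not how any particular vendor implements them: the sample data, rule wording, and function names are hypothetical, and outliers are flagged with a simple interquartile-range (Tukey fence) test.

```python
from dataclasses import dataclass
from statistics import quantiles
from typing import Any, Callable


def profile_column(values: list[Any]) -> dict[str, Any]:
    """Summarize one column: completeness, cardinality, and numeric outliers."""
    non_null = [v for v in values if v is not None]
    profile: dict[str, Any] = {
        "count": len(values),
        "null_rate": 1 - len(non_null) / len(values) if values else 0.0,
        "distinct": len(set(non_null)),
    }
    numbers = [v for v in non_null if isinstance(v, (int, float)) and not isinstance(v, bool)]
    if len(numbers) >= 2:
        # Tukey fences: flag values far outside the middle 50% of the data.
        q1, _, q3 = quantiles(numbers, n=4, method="inclusive")
        low, high = q1 - 1.5 * (q3 - q1), q3 + 1.5 * (q3 - q1)
        profile.update(min=min(numbers), max=max(numbers))
        profile["outliers"] = [x for x in numbers if x < low or x > high]
    return profile


@dataclass
class Rule:
    description: str               # the rule as a business user would phrase it
    check: Callable[[dict], bool]  # its machine-checkable translation


def run_rules(rows: list[dict], rules: list[Rule]) -> dict[str, list[int]]:
    """Return, for each rule, the indexes of the rows that violate it."""
    return {
        rule.description: [i for i, row in enumerate(rows) if not rule.check(row)]
        for rule in rules
    }


orders = [
    {"amount": 10, "country": "DE"},
    {"amount": 12, "country": "PL"},
    {"amount": 11, "country": None},
    {"amount": 13, "country": "DE"},
    {"amount": 12, "country": "FR"},
    {"amount": 500, "country": "PL"},
]
print(profile_column([o["amount"] for o in orders]))
print(run_rules(orders, [
    Rule("Every order has a country", lambda r: r["country"] is not None),
    Rule("Order amounts are between 1 and 100", lambda r: 1 <= r["amount"] <= 100),
]))
```

The point of the `Rule` pairing is that the plain-language description travels with the check, so a failure report reads in business terms rather than as a raw expression.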

If a product checks most of these boxes and fits your team’s maturity, you’ve got a winner. And many modern enterprise platforms are trying to bake several of these capabilities into a single suite.

At Murdio, we most often work with Collibra’s Data Quality & Observability – and it stands out because it connects data quality to governance, ownership, and business policies, rather than treating it as an isolated IT concern (which it definitely isn’t).

In the following recommendations, we’ll talk more about Collibra (and we break it down even more in this article: Collibra Data Quality and Observability: What Is It, and Why Should You Care?). But we will also point you to some other data quality tools out there that have caught our attention.

Note that, given how we’ve grouped the tools in this article, some belong to several categories (specifically the more complex platforms like Collibra, which are suites of tools built to work seamlessly together).

Enterprise platforms for end-to-end data quality management

At enterprise scale, data quality is rarely a single tool story. You want an integrated platform that covers discovery, policy, quality enforcement, and the human processes that make data reliable.

The three most common platform archetypes are:

- Governance-first platforms with embedded data quality (like Collibra) that put governance and the business glossary at the center, then layer data quality and observability on top. The advantage is tight coupling between policies, owners, and data quality checks. Collibra’s approach, for example, combines rule management, lineage, and automated monitoring with governance workflows to align technical checks to business policies.

- Observability-first platforms that later add governance, like Monte Carlo. These started with strong anomaly detection, lineage, and incident management, and many are now expanding into governance and cataloging integrations so that alerts become part of a governance loop.

- Integration-focused suites and traditional data management vendors that offer mature data quality modules as part of broader suites of tools. These are great if you already use those ecosystems, but they’re often more prescriptive and can be heavier to deploy.

Let’s start with some examples of end-to-end enterprise data quality platforms.

Collibra Data Quality & Observability

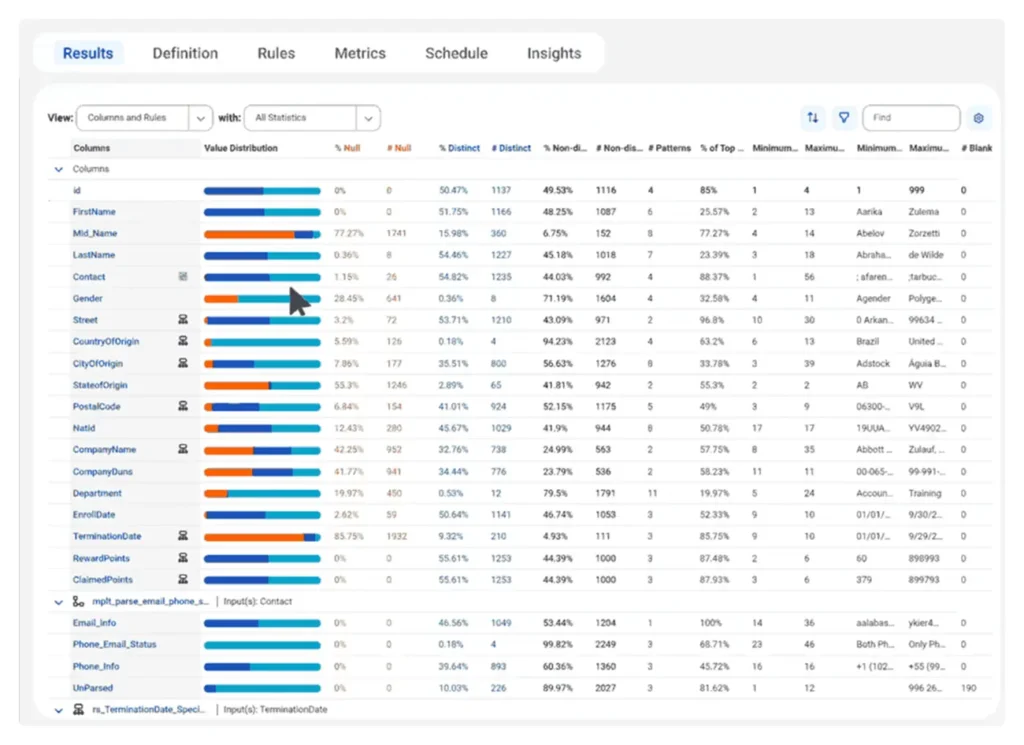

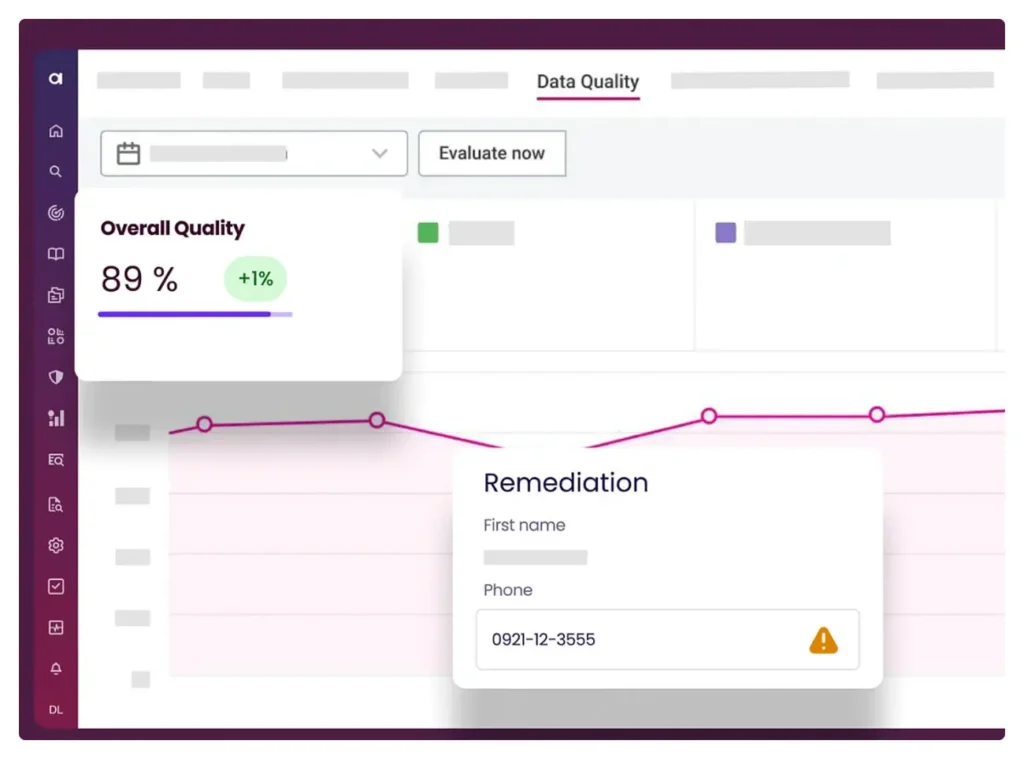

The Collibra Data Quality and Observability platform integrates data quality directly into Collibra’s data governance framework. It uses machine learning to detect anomalies, map lineage, and link data issues to business policies and owners – turning data quality management into a collaborative, cross-team effort.

Key features:

- Automated data profiling and cleansing

- Quality scoring and data quality certification

- AI-powered data quality rule creation

- Proactive anomaly detection, real-time alerts, and reporting

- Data quality ownership and data quality issue management workflows

- Easy integration with Collibra’s centralized data catalog, data governance, and data lineage

And that last point is why Collibra’s data quality is the natural progression if you already use Collibra for data management. The tools work seamlessly together and are an easy and powerful expansion of Collibra Data Catalog capabilities.
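What does “quality scoring and certification” boil down to? The following is not Collibra’s scoring model; it’s a generic, hypothetical illustration of one common approach: roll individual check results into a weighted pass rate on a 0 to 100 scale, then certify a dataset only if it clears a threshold. The check names, weights, and the 95-point threshold are all assumptions.

```python
from dataclasses import dataclass


@dataclass
class CheckResult:
    name: str
    rows_checked: int
    rows_failed: int
    weight: float = 1.0  # hypothetical business-importance weight


def quality_score(results: list[CheckResult]) -> float:
    """Weighted average pass rate across checks, scaled to 0-100."""
    scored = [r for r in results if r.rows_checked > 0]
    if not scored:
        raise ValueError("no checks with data to score")
    total_weight = sum(r.weight for r in scored)
    weighted = sum(r.weight * (1 - r.rows_failed / r.rows_checked) for r in scored)
    return round(100 * weighted / total_weight, 1)


def is_certified(score: float, threshold: float = 95.0) -> bool:
    """Certify a dataset only when its score clears the (hypothetical) threshold."""
    return score >= threshold


results = [
    CheckResult("customer_id is never null", 1000, 50, weight=2.0),
    CheckResult("email matches pattern", 1000, 200),
]
score = quality_score(results)
print(score, is_certified(score))
```

Weighting lets a failure on a business-critical rule pull the score down harder than a cosmetic one, which is the kind of policy decision governance teams usually own.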

For specific examples, read some of our case studies focused on data quality in Collibra:

→ How an energy giant transformed its Collibra implementation with a Technical Product Owner

→ Management and cataloging sensitive critical data elements in a Swiss bank

→ Collibra Implementation Team for an International Retail Chain

→ Custom Collibra SAP Lineage Implementation

Informatica Data Quality

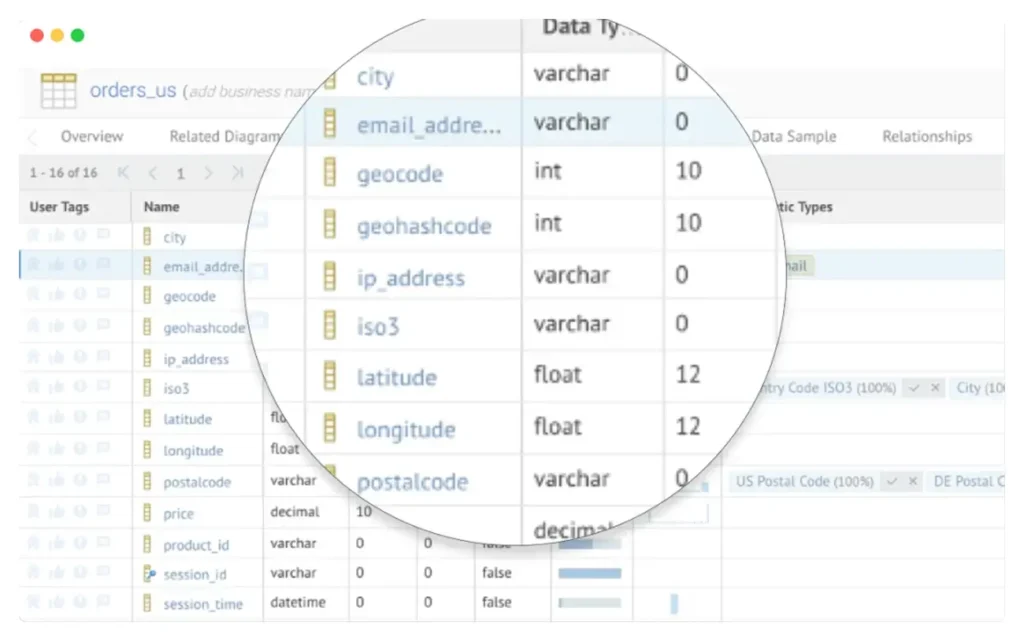

Informatica Data Quality is part of Informatica’s Intelligent Data Management Cloud (similar to Collibra’s unified platform) and offers enterprise-grade data quality alongside metadata management and governance capabilities.

Key features:

- Automated data profiling

- Integrated data cleansing and standardization

- Prebuilt AI-powered data quality rules

- Data observability

- Multiple connectors available

Ataccama ONE

Ataccama ONE is another comprehensive platform that combines data cataloging, governance, and data quality profiling with an easy-to-use interface that works for both technical and business users. As with Collibra, data quality is one of its modules.

Key features:

- Out-of-the-box data quality validation

- Automated data profiling, anomaly detection, standardization and cleansing

- AI-powered data lineage and reusable data quality rules

- Monitoring, reporting, and reconciliation checks
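Reconciliation checks compare what left a source system with what arrived in the target. As a rough, vendor-neutral sketch (not Ataccama’s implementation), a basic reconciliation compares row counts, business keys, and a control total. The field names and sample data are hypothetical.

```python
from decimal import Decimal


def reconcile(source: list[dict], target: list[dict], key: str, amount: str) -> dict:
    """Compare two extracts by row count, key sets, and a control total."""
    src_keys = {row[key] for row in source}
    tgt_keys = {row[key] for row in target}
    # Decimal avoids float rounding noise when comparing monetary totals.
    src_total = sum((Decimal(str(row[amount])) for row in source), Decimal("0"))
    tgt_total = sum((Decimal(str(row[amount])) for row in target), Decimal("0"))
    return {
        "row_count_diff": len(target) - len(source),
        "missing_in_target": sorted(src_keys - tgt_keys),
        "unexpected_in_target": sorted(tgt_keys - src_keys),
        "total_diff": tgt_total - src_total,
    }


def is_reconciled(report: dict) -> bool:
    """True only if counts, keys, and totals all match."""
    return (
        report["row_count_diff"] == 0
        and not report["missing_in_target"]
        and not report["unexpected_in_target"]
        and report["total_diff"] == 0
    )


erp = [{"invoice": "A1", "amount": "100.00"}, {"invoice": "A2", "amount": "250.50"}]
warehouse = [{"invoice": "A1", "amount": "100.00"}]
report = reconcile(erp, warehouse, key="invoice", amount="amount")
print(report, is_reconciled(report))
```

Checking keys as well as counts matters: a load that drops one row and duplicates another passes a row-count check but fails the key comparison.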

The new wave: The rise of data observability tools

Observability has become a key complement to data quality tools because, instead of reacting to broken dashboards, observability helps you detect, triage, and proactively prevent issues throughout the entire data lifecycle.

Observability tools can turn noisy monitoring into prioritized, actionable incidents, but they’re only as good as the integrations and governance that surround them. The platforms mentioned above, like Collibra Data Quality and Observability, could also go into this category, since they include observability capabilities.
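At its simplest, automated anomaly detection compares today’s value of a metric with its recent history. Here’s a minimal sketch, assuming daily row counts for a single table and a rolling z-score with a hypothetical 7-day window and a threshold of 3. Commercial tools often use richer models that account for seasonality and trends; this only shows the core idea.

```python
from statistics import mean, stdev


def volume_anomalies(daily_counts: list[int], window: int = 7, threshold: float = 3.0) -> list[int]:
    """Return the indexes of days whose row count deviates sharply from the prior window."""
    anomalies = []
    for day in range(window, len(daily_counts)):
        history = daily_counts[day - window:day]
        mu, sigma = mean(history), stdev(history)
        if sigma == 0:
            # A perfectly flat history: any change at all is suspicious.
            if daily_counts[day] != mu:
                anomalies.append(day)
            continue
        z_score = (daily_counts[day] - mu) / sigma
        if abs(z_score) > threshold:
            anomalies.append(day)
    return anomalies


row_counts = [1000, 1010, 990, 1005, 995, 1002, 998, 1001, 120, 1003]
print(volume_anomalies(row_counts))
```

Note that the anomalous day stays in the window for the following week and inflates the baseline; production systems typically exclude confirmed incidents from their training history for exactly that reason.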

Let’s look at a few others. (Note: the distinction between a data quality tool and a data observability tool may come down to product positioning alone, so always check the capabilities and make sure a tool offers exactly what you need.)

Monte Carlo

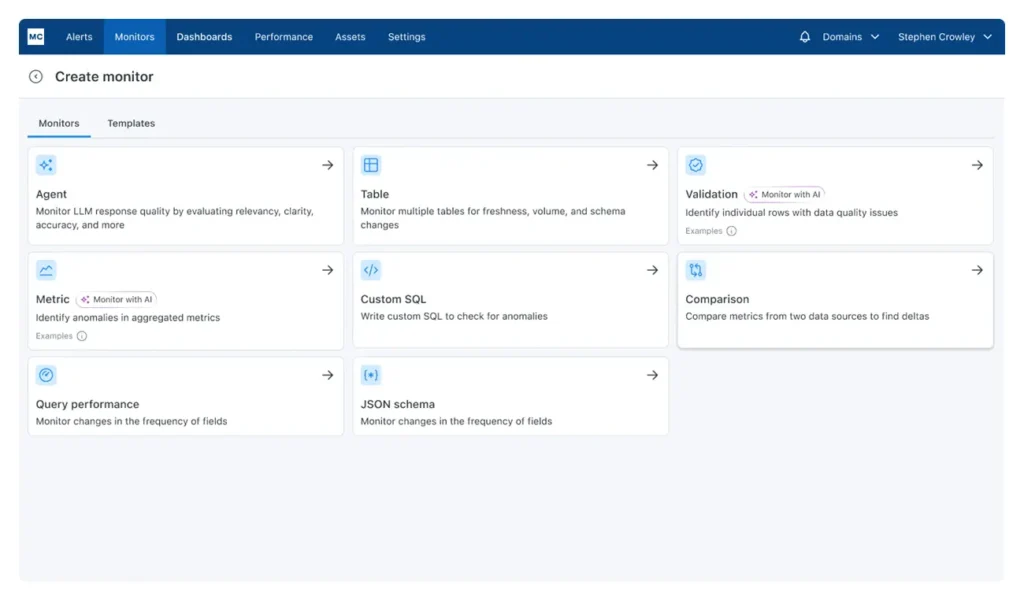

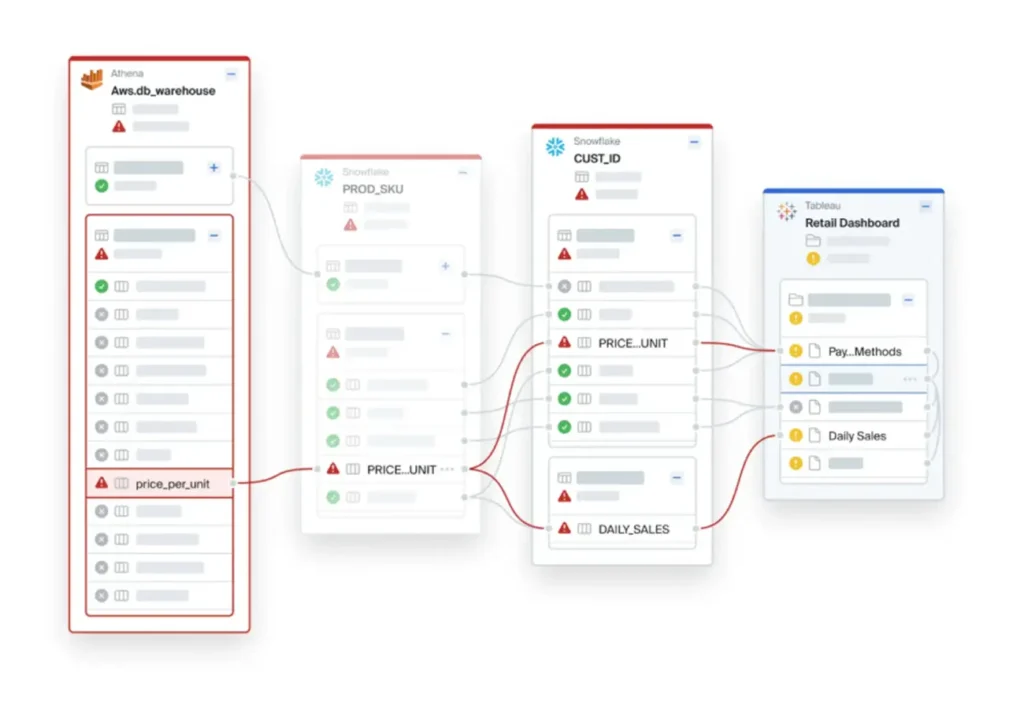

Monte Carlo focuses on end-to-end data observability across data estates and AI.

Key features:

- Automated profiling and anomaly detection

- AI observability and observability agents

- Visual lineage tracking, impact analysis, and root cause analysis

- Integrations with other data tools
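Impact analysis and root cause analysis are, at their core, two traversals of the same lineage graph: downstream to see what a broken table affects, and upstream to see what could have caused the problem. Here’s a minimal sketch with a hard-coded, hypothetical lineage graph; real tools typically derive this graph automatically from query logs and metadata, which this sketch doesn’t attempt.

```python
from collections import deque

# Hypothetical lineage: each key feeds the assets listed as its values.
LINEAGE = {
    "crm.customers": ["staging.customers"],
    "erp.orders": ["staging.orders"],
    "staging.customers": ["mart.revenue_by_customer"],
    "staging.orders": ["mart.revenue_by_customer", "mart.daily_orders"],
    "mart.revenue_by_customer": ["dashboard.exec_kpis"],
    "mart.daily_orders": [],
    "dashboard.exec_kpis": [],
}


def downstream(graph: dict[str, list[str]], start: str) -> set[str]:
    """Impact analysis: every asset reachable from `start` (breadth-first)."""
    seen: set[str] = set()
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for child in graph.get(node, []):
            if child not in seen:
                seen.add(child)
                queue.append(child)
    return seen


def upstream(graph: dict[str, list[str]], start: str) -> set[str]:
    """Root-cause candidates: every asset that feeds into `start`."""
    reverse: dict[str, list[str]] = {}
    for parent, children in graph.items():
        for child in children:
            reverse.setdefault(child, []).append(parent)
    return downstream(reverse, start)


print(sorted(downstream(LINEAGE, "erp.orders")))
print(sorted(upstream(LINEAGE, "dashboard.exec_kpis")))
```

Reversing the edges lets one traversal function serve both directions, which mirrors how a single lineage view answers both “what breaks?” and “why did it break?”.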

Bigeye

Bigeye offers lineage-enabled data observability for complex enterprise environments.

Key features:

- Automated root cause and impact analysis workflows

- Data catalog integrations

- Business-oriented data health summaries

- Real-time alerting

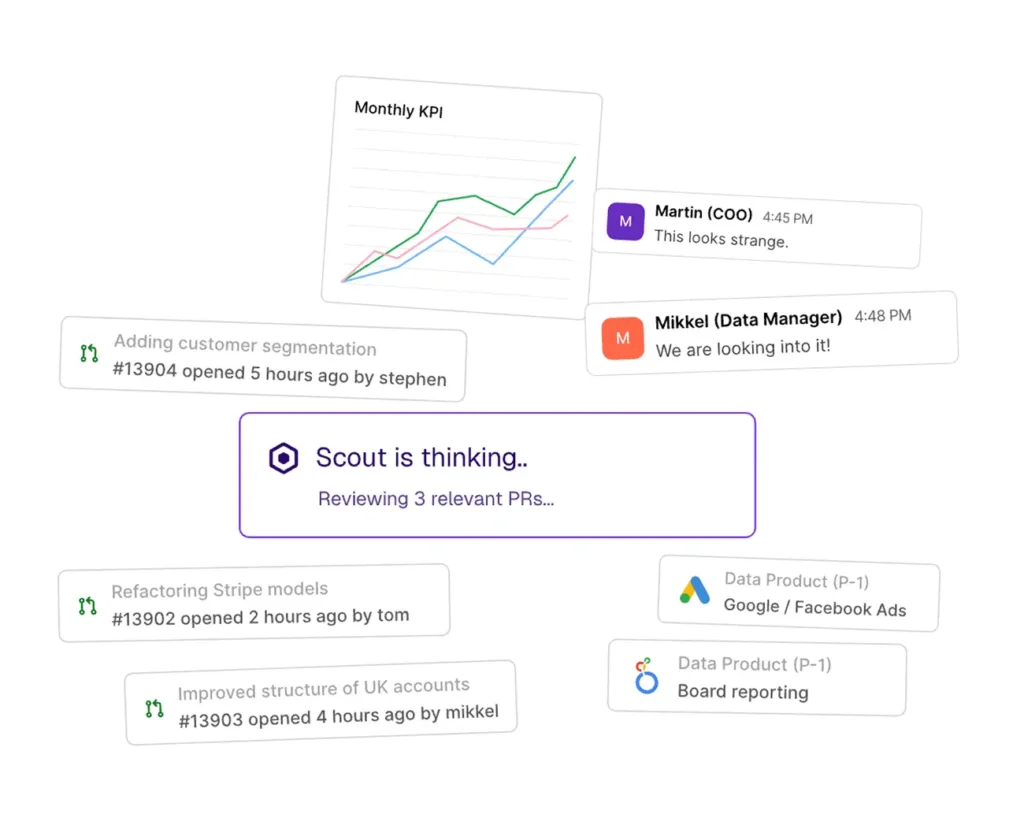

SYNQ

SYNQ definitely stands out here, if only because of its interface. It’s a data observability platform powered by a data quality AI agent that debugs issues, recommends tests, and resolves problems directly in code. And it’s built around data products.

Key features:

- Testing and anomaly monitoring

- Data ownership and alerting

- Lineage and root cause analysis

- Incident management
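Ownership, alerting, and incident management come together when raw alerts are grouped into incidents, routed to an owner, and ranked by business impact. Here’s a minimal, vendor-neutral sketch of that idea (not SYNQ’s implementation); the owner map, impact weights, and severity scale are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Alert:
    asset: str
    check: str
    severity: int  # hypothetical scale: 1 = low, 3 = high


@dataclass
class Incident:
    asset: str
    owner: str
    alerts: list[Alert] = field(default_factory=list)


def build_incidents(
    alerts: list[Alert],
    owners: dict[str, str],
    impact: dict[str, int],
    default_owner: str = "data-platform",
) -> list[Incident]:
    """Group alerts per asset, drop repeated firings, and rank by severity x impact."""
    incidents: dict[str, Incident] = {}
    for alert in alerts:
        if alert.asset not in incidents:
            owner = owners.get(alert.asset, default_owner)
            incidents[alert.asset] = Incident(alert.asset, owner)
        incident = incidents[alert.asset]
        # The same check firing again adds noise, not information.
        if all(a.check != alert.check for a in incident.alerts):
            incident.alerts.append(alert)

    def priority(incident: Incident) -> int:
        return max(a.severity for a in incident.alerts) * impact.get(incident.asset, 1)

    return sorted(incidents.values(), key=priority, reverse=True)


OWNERS = {"mart.revenue": "finance-data", "mart.campaigns": "marketing-analytics"}
IMPACT = {"mart.revenue": 3, "mart.campaigns": 1}  # e.g. how many key reports depend on it

alerts = [
    Alert("mart.campaigns", "freshness", 3),
    Alert("mart.revenue", "null_rate", 2),
    Alert("mart.revenue", "null_rate", 2),  # the same check firing again
    Alert("mart.revenue", "row_count", 1),
    Alert("staging.tmp", "schema_change", 1),
]
for inc in build_incidents(alerts, OWNERS, IMPACT):
    print(inc.owner, inc.asset, [a.check for a in inc.alerts])
```

Here the revenue mart outranks a higher-severity campaign alert because more of the business depends on it, which is the “right alerts, to the right people” idea in miniature.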

The power of community: Open source data quality tools

Open source has become a pragmatic choice for teams that want control, transparency, and cost flexibility. Here are a couple of open-source projects that consistently show up in production pipelines.

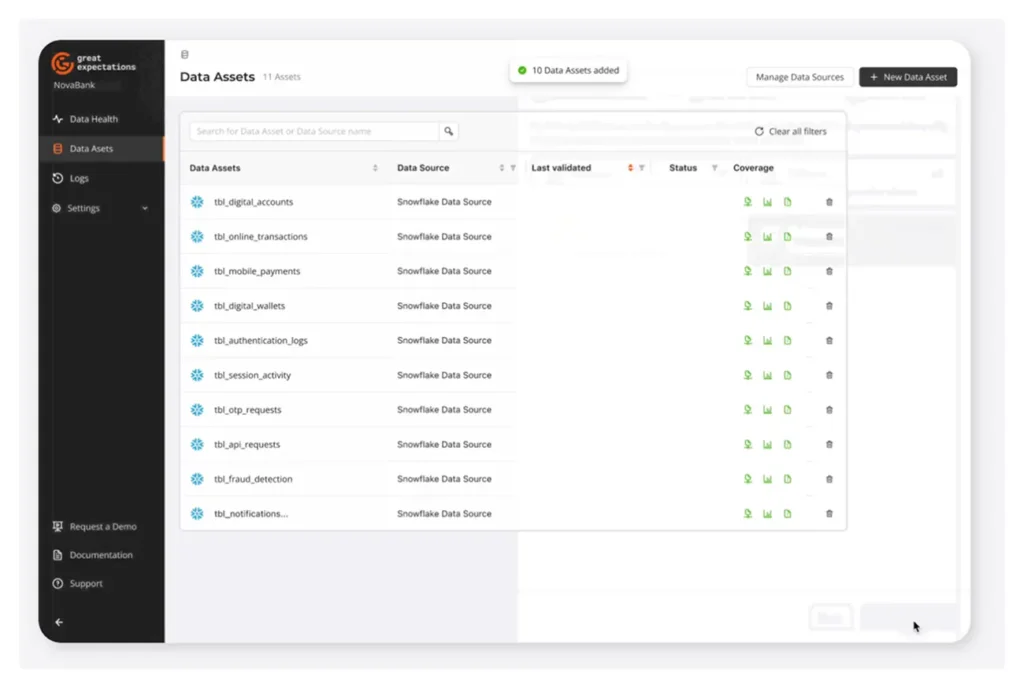

Great Expectations

Great Expectations is a Python-based, open-source data validation framework that has become popular for managing and automating data validation in modern data pipelines.

Key features:

- Connects to popular data sources like Databricks and Snowflake

- Easy setup with no infrastructure to manage

- Built-in observability and collaboration tools

- Auto-generated tests and real-time alerts
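The snippet below does not use the Great Expectations library. It’s a plain-Python sketch of the general idea behind auto-generated tests: learn simple expectations (not-null, numeric range, allowed values) from a batch you trust, then validate new batches against them. The function names, the 10-category cutoff, and the sample data are hypothetical.

```python
from typing import Any


def is_number(value: Any) -> bool:
    return isinstance(value, (int, float)) and not isinstance(value, bool)


def infer_expectations(rows: list[dict], max_categories: int = 10) -> dict[str, dict]:
    """Derive simple column-level expectations from a known-good batch."""
    expectations: dict[str, dict] = {}
    for column in rows[0]:
        values = [row.get(column) for row in rows]
        non_null = [v for v in values if v is not None]
        expectation: dict[str, Any] = {"not_null": len(non_null) == len(values)}
        if non_null and all(is_number(v) for v in non_null):
            expectation["min"], expectation["max"] = min(non_null), max(non_null)
        elif len(set(non_null)) <= max_categories:
            expectation["allowed"] = set(non_null)
        expectations[column] = expectation
    return expectations


def validate(rows: list[dict], expectations: dict[str, dict]) -> list[str]:
    """Check a new batch against learned expectations; return readable failures."""
    failures = []
    for i, row in enumerate(rows):
        for column, exp in expectations.items():
            value = row.get(column)
            if value is None:
                if exp["not_null"]:
                    failures.append(f"row {i}: {column} is null")
                continue
            if "min" in exp and not (is_number(value) and exp["min"] <= value <= exp["max"]):
                failures.append(f"row {i}: {column}={value!r} outside [{exp['min']}, {exp['max']}]")
            if "allowed" in exp and value not in exp["allowed"]:
                failures.append(f"row {i}: {column}={value!r} is not a known value")
    return failures


baseline = [
    {"amount": 20.0, "status": "paid"},
    {"amount": 35.5, "status": "refunded"},
    {"amount": 12.0, "status": "paid"},
]
new_batch = [
    {"amount": 25.0, "status": "paid"},
    {"amount": None, "status": "paid"},
    {"amount": -5.0, "status": "pending"},
]
for failure in validate(new_batch, infer_expectations(baseline)):
    print(failure)
```

Ranges learned from three rows are deliberately naive. In practice you would learn from much more data and add tolerances; otherwise every new high or low becomes a false alarm.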

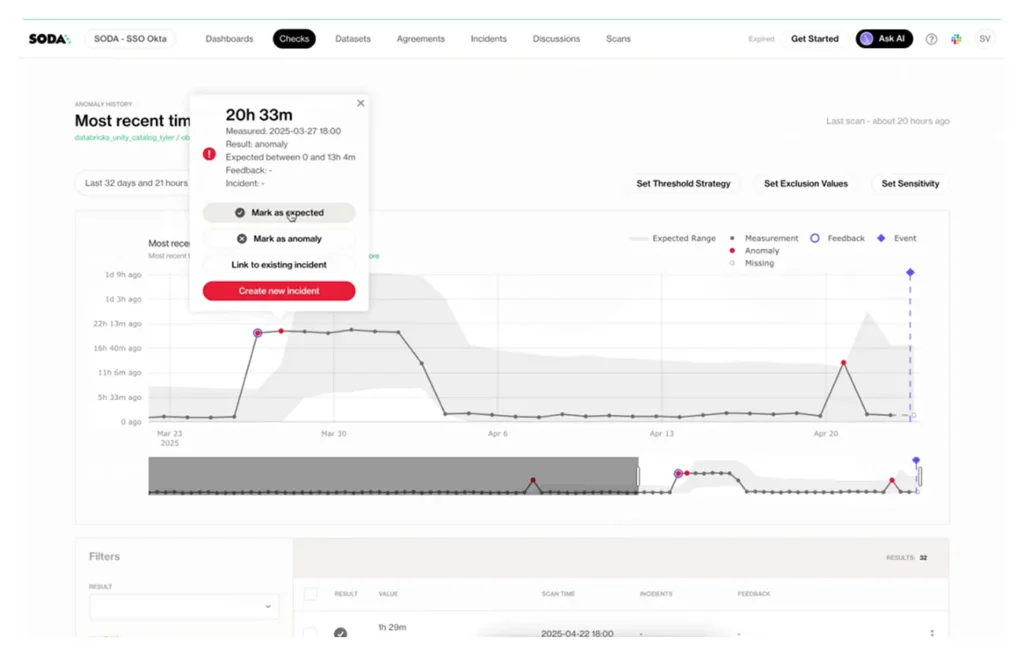

Soda (Soda Core / Soda Cloud)

Soda is another tool built for simple checks and quick onboarding. Its strength is ease of use and fast time-to-value for basic checks and monitoring, whereas the enterprise tools above are more comprehensive and advanced.

Key features:

- AI-powered metrics observability

- Pipeline and CI/CD workflow testing

- Collaborative contracts

- Operational data quality
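Soda expresses its checks and contracts in its own YAML-based language, which we won’t reproduce here. The plain-Python sketch below only illustrates the general pattern behind contract testing in a CI/CD pipeline: producers and consumers agree on a schema, and a pipeline step fails the build when a batch breaks it. The contract, column names, and functions are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ColumnSpec:
    name: str
    dtype: type
    required: bool = True


# Hypothetical contract agreed between the team producing "orders" and its consumers.
ORDERS_CONTRACT = [
    ColumnSpec("order_id", int),
    ColumnSpec("amount", float),
    ColumnSpec("coupon_code", str, required=False),
]


def check_contract(rows: list[dict], contract: list[ColumnSpec]) -> list[str]:
    """Return every contract violation found in a batch of rows."""
    known = {spec.name for spec in contract}
    violations = []
    for i, row in enumerate(rows):
        for column in sorted(row.keys() - known):
            violations.append(f"row {i}: unexpected column {column!r}")
        for spec in contract:
            value = row.get(spec.name)
            if value is None:
                if spec.required:
                    violations.append(f"row {i}: missing required column {spec.name!r}")
            elif not isinstance(value, spec.dtype):
                violations.append(
                    f"row {i}: {spec.name!r} should be {spec.dtype.__name__}, "
                    f"got {type(value).__name__}"
                )
    return violations


def ci_exit_code(violations: list[str]) -> int:
    """Print violations and return a process exit code: non-zero fails the CI step."""
    for violation in violations:
        print(violation)
    return 1 if violations else 0


batch = [
    {"order_id": 1, "amount": 9.99},
    {"order_id": "2", "amount": 5.0, "discount": 1.0},
]
print("exit code:", ci_exit_code(check_contract(batch, ORDERS_CONTRACT)))
```

In a CI job, a script would end with `sys.exit(ci_exit_code(...))` so that a broken contract stops the deployment instead of surfacing later as a broken dashboard.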

Ecosystem-integrated solutions for different types of data

This is a very broad category, since it varies with the types of data involved. And again, the comprehensive enterprise solutions we mentioned at the beginning could also fit here.

Several other product suites often make the most sense for companies that already use a vendor’s integration or MDM tools and want to extend into data quality without rebuilding their entire ecosystem.

Here are two more examples:

Talend Data Quality

Talend Data Quality offers profiling, standardization, and matching capabilities as part of Talend’s data integration suite, now part of the Qlik ecosystem. These features fit neatly into ETL and data integration workflows.

Best for: teams already using Talend or Qlik for data pipelines or analytics, looking to centralize quality and transformation.
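Standardization and matching usually go hand in hand: you normalize values first so that a fuzzy comparison has a fair chance. Here’s a minimal sketch using Python’s standard-library `difflib` (not Talend’s matching engine); the suffix table, the digits-only phone rule, and the 0.9 similarity threshold are hypothetical.

```python
import re
from difflib import SequenceMatcher
from itertools import combinations

# Hypothetical standardization table for company-name suffixes.
SUFFIXES = {"corporation": "corp", "incorporated": "inc", "limited": "ltd"}


def standardize_name(name: str) -> str:
    """Lowercase, strip punctuation, and map suffixes to one canonical form."""
    tokens = re.sub(r"[^\w\s]", " ", name.lower()).split()
    return " ".join(SUFFIXES.get(token, token) for token in tokens)


def standardize_phone(phone: str) -> str:
    """Keep digits only, so formatting differences don't block a match."""
    return re.sub(r"\D", "", phone)


def find_duplicates(records: list[dict], threshold: float = 0.9) -> list[tuple[int, int, float]]:
    """Pair up records with the same phone number and near-identical names."""
    std = [(standardize_name(r["name"]), standardize_phone(r["phone"])) for r in records]
    matches = []
    for i, j in combinations(range(len(records)), 2):
        if std[i][1] != std[j][1]:
            continue
        similarity = SequenceMatcher(None, std[i][0], std[j][0]).ratio()
        if similarity >= threshold:
            matches.append((i, j, round(similarity, 2)))
    return matches


customers = [
    {"name": "Acme Corporation", "phone": "+1 (555) 010-2000"},
    {"name": "ACME Corp.", "phone": "1-555-010-2000"},
    {"name": "Globex Limited", "phone": "+44 20 7946 0000"},
]
print(find_duplicates(customers))
```

Comparing every pair is quadratic, which is why matching tools commonly group records into candidate blocks (for example, by postal code) before running the fuzzy comparison.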

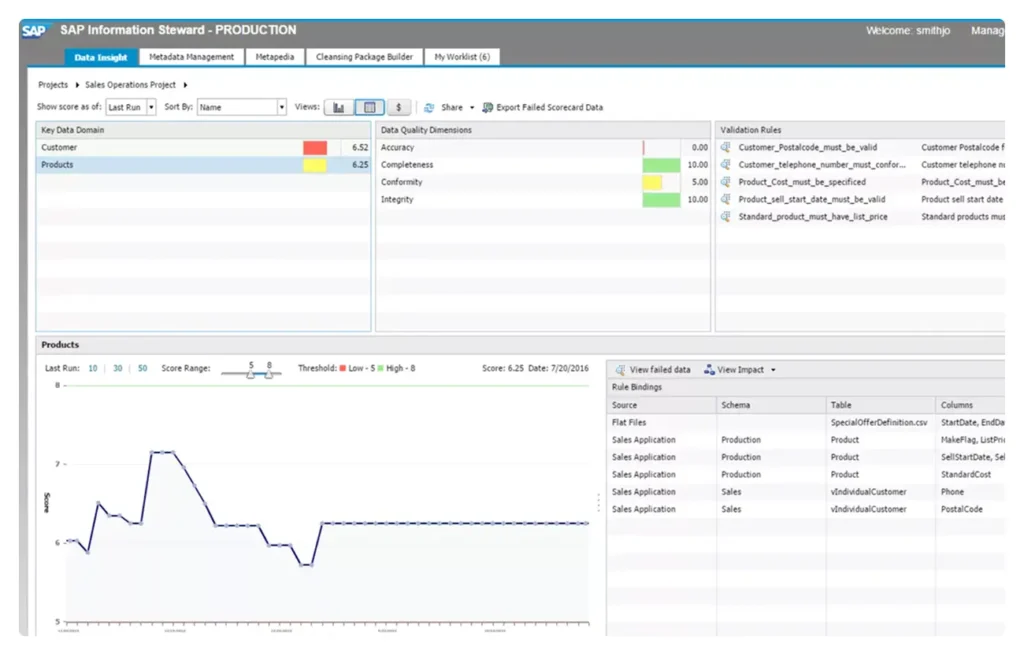

SAP Information Steward

A governance-focused solution that monitors, profiles, and manages data quality across SAP and non-SAP environments. It connects tightly with SAP Data Services and SAP Master Data Governance.

Best for: enterprises managing complex SAP landscapes that need to enforce consistent data standards across systems.

The bottom line

The data quality landscape in 2025 is broad, and the best choice depends less on features alone and more on how a tool fits your existing data environment, maturity, and business goals. Whether you’re embedding data quality checks into data pipelines, scaling governance across domains, or connecting observability to business impact, the right platform should help you see, understand, and act on your data with confidence.

At Murdio, we can help you choose the right tool for your enterprise – and find optimal solutions to your data quality challenges, so reach out, and let’s chat.

And if you’re up for a more detailed read on data quality, here are some related pieces on our blog:

Data quality vs. data integrity

How to improve data quality in retail

Build a data quality framework for business value

The ultimate guide to data quality assurance

FAQs

How do I choose the right type of data quality tool?

- If you need end-to-end governance, go for an enterprise platform.

- If you’re tackling pipeline reliability, consider observability tools.

- If you want cost flexibility and customization, open source might be a good fit.

- And if you’re deeply embedded in a specific vendor ecosystem, native integrations will save you time and complexity.

Should I extend my data governance platform or deploy a standalone data quality tool?

That depends on a few things. If your governance tool includes integrated data quality and observability (like Collibra or Informatica), extending those modules often brings better alignment and less overhead than deploying a standalone solution.

Are open-source data quality tools ready for production use?

Yes, especially for specific use cases like validation, monitoring, or testing. Tools like Great Expectations are widely used in production environments. However, they typically require more configuration and custom development than enterprise suites.

Which data quality tool is the best?

It depends on your priorities.

If the choice is complex, we’re happy to help based on our extensive experience in data governance and data quality solutions for enterprise clients. Schedule a call with a Murdio expert, and we can talk about your goals and optimal solutions in your specific case.