Traditional data catalogs weren’t designed with machine learning in mind. They’re still valuable for organizing and discovering data, sure. But when it comes to fast-moving, highly collaborative machine learning workflows, they start to show their limits.

A machine learning data catalog is built for the messier, more dynamic reality of modern ML. In this article, we’ll unpack what machine learning data catalogs actually are, why they’ve become so important in 2025, how they’re different from the traditional kind, and what to look for in the best ones.

Why data management is key (and also challenging) for machine learning

Machine learning lives and dies by the quality of its data. You can have the most sophisticated machine learning models in the world, but if the data feeding them is incomplete, inconsistent, outdated, and untrackable, the results will reflect that. And you won’t be able to trust them.

It’s a cliche, but machine learning workflows are inherently complex. Unlike traditional data analytics, machine learning requires massive volumes of data that constantly evolve. Training sets, validation sets, transformed features, model outputs, logs – there’s a lot to track. There’s a lot of collaboration, iteration, and documentation involved, while maintaining compliance and reproducibility. And that’s no small feat to do it all.

Let’s take a quick look at some of the biggest challenges that come to mind immediately for anyone who’s dealt with machine learning model development:

- High data volume and velocity. Machine learning workflows rely on large, constantly changing datasets that undergo multiple transformations during model training, validation, inference, and so on.

- Complex versioning requirements. It’s not just data that needs to be versioned, but also features, model artifacts, and transformation logic.

- Lack of documentation and visibility. Teams often struggle with inconsistent metadata, undocumented features, and unclear data lineage.

- Reproducibility issues. Without robust lineage tracking, reproducing model results or understanding how a model was trained gets really difficult.

- Regulatory and compliance pressure. With increasing emphasis on explainability and data governance, machine learning systems have to be transparent and auditable. We’ll later show you how we helped one of our clients, a global bank, achieve just that.

Machine learning data catalogs address all of these pain points and more by bringing order, automation, and traceability to even the most complex machine learning data ecosystems.

What is a machine learning data catalog?

A machine learning data catalog is a platform that helps organize, document, and manage all the data assets that power machine learning systems – an enormous challenge for today’s data teams. That includes raw datasets, transformed features, versioned training sets, and all the metadata that connects them to the models they support.

If you’ve worked with a traditional data catalog before, the difference (which we’ll expand on a little later) could be described with one word, and that’s context.

Unlike simpler tools like data dictionaries, machine learning data catalogs capture the complex relationships between data assets and machine learning models.

Machine learning data catalogs are built specifically for the management of the machine learning lifecycle. That means they don’t just index tables and columns from various data sources. They track:

- How datasets are used in model training,

- Which features were engineered (and how),

- What data version was deployed in production,

- And how all of it changes over time.

In other words, a machine learning data catalog is like a living map of your machine learning ecosystem.

We’ve seen teams who were deep into building and deploying models but struggling with documentation, version control, and even reproducibility. Usually, what they needed wasn’t just another place to list data assets – because that’s really no help. They needed a system to track how everything fits together.

In our experience, companies often pair data catalog platforms like Collibra with orchestration and model management tools for a tightly integrated feedback loop between data, models, and outcomes.

(And we typically recommend Collibra because it’s a leader in machine learning data catalogs.)

The rise of machine learning data catalogs

Over the last few years, machine learning data catalogs have evolved from niche tools to essential business infrastructure. And there are several reasons for that.

Machine learning isn’t just R&D anymore

In the early days, machine learning was something done by small research teams. A few data scientists experimenting in notebooks, running scripts, testing models – often without much process.

But as both machine learning and AI got more universally used and proved their business value, the stakes got higher. Today, machine learning is powering processes like real-time fraud detection, customer support automation, or predictive maintenance.

That shift from ad hoc projects to mission-critical systems changed a lot in how the data needs to be managed. And it needs to be managed efficiently and carefully – that’s the baseline. You can’t rely on critical processes fueled by data that’s not tracked and producing results you can’t reproduce.

So, suddenly, machine learning teams needed workflows, infrastructure, and tools that could scale. And data management, unsurprisingly, quickly became one of the biggest pain points.

Traditional data catalogs hit a wall

Companies have long relied on traditional data catalogs to keep track of their enterprise data assets. Enterprise data catalogs work well for BI and analytics, where the questions are stable, the pipelines are well-defined, and the data doesn’t change all that often. “Change” and “often” being the key words here.

But machine learning is a different beast, and the standard data catalog benefits are just not enough.

Machine learning pipelines involve constant iteration – new data, new features, new experiments. Data scientists are moving fast, trying out ideas, and testing assumptions. And all of that needs to be tracked. Traditional data catalogs just weren’t built for that level of change and complexity.

Read more: How to build a data catalog.

Imagine teams trying to retrofit traditional catalogs to track machine learning data, only to end up with brittle documentation and frustrated engineers. (We’ve seen cases like this.) With machine learning data catalogs, change is the norm, not the exception.

Governance is now part of the job

There’s also the growing pressure to make machine learning (and data management in general) more explainable, auditable, and accountable.

With new AI regulations rolling out globally, and more on the way, companies are under the spotlight, especially in industries like finance or healthcare (though, no industry is really exempt from regulatory compliance). This means that data stewards need to know exactly what data went into a model, who touched it, how it was processed, and what it influenced is essential, and you can’t skip it.

Machine learning data catalogs make that visibility possible. They help teams stay compliant without slowing down innovation, a common fear when the word “regulation” is coupled with “AI” and “ML”.

Machine learning data catalogs vs. traditional data catalogs

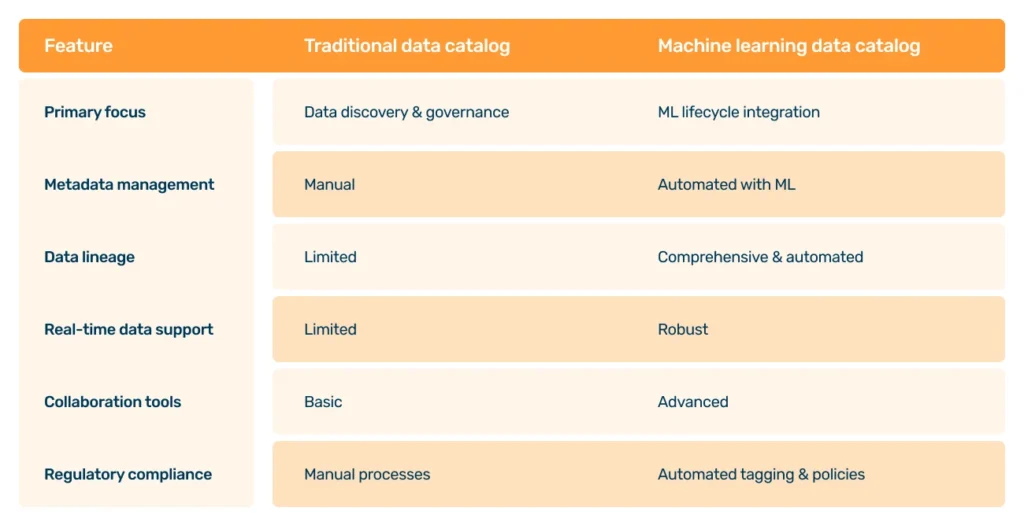

So, how are machine learning data catalogs actually different from their traditional counterparts?

Traditional catalogs are great for static or slowly changing data environments. But machine learning requires a dynamic, end-to-end view of how data moves, transforms, and feeds into real-time decisions.

Here’s a quick comparison:

(We could add a few asterisks here and there, as with the right skills and know-how, data catalogs built using platforms like Collibra can be highly customized and automated, so this is probably a bit of an oversimplification.)

Key machine learning data catalog features

There are some data catalog best practices and features you should keep in mind when implementing a machine learning data catalog. Here’s what to look for.

1. Automated data tagging

Machine learning data catalogs use machine learning algorithms to automatically classify and tag data assets – structured, unstructured, and everything in between. This includes sensitive data like PII and PHI. Automated tagging helps with:

- Consistent data classification across datasets

- Easier data discovery for teams

- Simpler and more reliable compliance workflows

It eliminates the need for tedious manual classification and keeps metadata always accurate and up to date.

2. Comprehensive data profiling

More than just basic metadata, a machine learning data catalog gives you a 360-degree profile of every data asset. This includes:

- Statistical summaries (e.g., missing values, distributions)

- Lineage and transformation history

- Usage metrics and access patterns

- Data quality scores and anomalies

With this level of visibility, it’s much easier to assess whether data is reliable and suitable for training machine learning models.

3. Semantic search capabilities

Modern machine learning data catalogs borrow from consumer-grade search experiences (think Google search). Instead of navigating folders or learning SQL, catalog users can search using plain language. They often include:

- Natural language search support

- Intelligent auto-suggestions

- Filtering by tags, owners, data types, and more

- Result relevance powered by usage context

This way, non-technical stakeholders can search for data more easily, and data discovery is faster for everyone.

4. Automated data lineage mapping

By parsing query logs and tracking data flows and transformations, a machine learning data catalog automatically generates visual representations of data lineage, providing transparency and helping with impact analysis. It can provide:

- End-to-end data lineage, from raw source to model prediction

- Visibility into which models depend on which datasets

- Insight into the transformations and code used in the pipeline

5. Dataset and feature versioning

If you can’t reproduce a model’s results, you can’t trust it. And you definitely can’t audit it. That’s why versioning is such a central feature of machine learning data catalogs. They let you:

- Save and restore versions of datasets and features

- Track how those versions were used in model training

- Compare outputs across different data snapshots

In our experience, Collibra is really helpful used as a central version control system, giving you the option to track version use (including who used it and when). So nobody has to ask, “What data was this trained on again?” – ever again, because you’ve got the full history right there.

6. Quality audits and governance

Data quality and governance are essential for trust in both the data and the models they power. A machine learning data catalog helps automate them using:

- Built-in data quality checks and anomaly detection

- Monitoring of schema changes and data drift

- Centralized management of data access controls and data policies

- Alerts for governance violations or suspicious activity

This will give you the confidence to scale your machine learning initiatives safely and responsibly.

7. Model and pipeline integration

Machine learning goes way beyond data itself – it’s also pipelines, models, experiments, deployments, and the systems that connect them all. Modern machine learning data catalogs integrate with software like:

- Model training tools (like MLflow, SageMaker, or Vertex AI)

- Orchestration frameworks (like Airflow or Kubeflow)

- Deployment platforms (for real-time or batch inference)

All of it creates a unified view across the entire machine learning lifecycle. You can go from a model’s prediction back to the exact data and code that powered it.

8. Collaboration features

Machine learning projects are rarely solo efforts. You’ve got data engineers, scientists, analysts, product owners, and compliance teams all involved in different ways. A good machine learning data catalog supports:

- Shared documentation for datasets and features

- Owner and usage metadata

- Access control and visibility settings

- Tags, labels, and grouping for easy discovery

Why shifting to a machine learning data catalog matters now

In a world where it becomes increasingly challenging to control data quality, machine learning data catalogs help build data management processes that people can trust, allowing teams to move fast without breaking things. That’s because a machine learning data catalog promotes:

- Faster experimentation without losing track of past work

- Stronger governance with full data lineage and versioning

- Better collaboration between technical and non-technical teams

- More reliable deployments by reducing drift and duplication

Of course, a machine learning data catalog is not a silver bullet (no single thing is), but it’s a foundational piece of any mature MLOps architecture.

If you’re still managing machine learning datasets in ad hoc ways or trying to make a general-purpose catalog stretch to fit your needs, it might be time for a change. And we could help.

At Murdio, we spend a lot of time helping teams scale their machine learning systems. And again and again, we see the same pattern: the better you manage your data workflows, the more confidence and speed you unlock across your entire machine learning lifecycle.

A good machine learning data catalog will help you build resilience into your systems, making your models more transparent and helping your team work smarter together.

How we helped a global bank with the lifecycle management of AI/ML models

One of our clients, a leading global financial institution, faced regulatory scrutiny over its fragmented AI and machine learning model management. They came to us because they needed a centralized “golden source” for their machine learning lifecycle.

The information about AI/ML models was scattered across multiple, disconnected systems, each with different formats, varying data models, and incomplete or inconsistent documentation.

They hired some of our experts for the technical expertise they didn’t have enough of in their in-house team. Our Collibra experts helped develop an internal centralized AI Inventory Platform – a Collibra-like system tailored specifically for AI/ML models. It was built with a robust, expandable API that allows other systems to register and retrieve model metadata automatically.

Any new model developed elsewhere in the ecosystem is now automatically registered in the inventory. This way, the platform significantly improved lifecycle management of AI/ML models, enhancing compliance and significantly reducing regulatory risks.

Here’s the full case study if you want to read more about how we did it.

Need help building your machine learning data infrastructure?

We’re here to help. At Murdio, we partner with data teams to design modern, scalable MLOps foundations, from cataloging to deployment and beyond. Reach out, and let’s have a conversation about your company’s data cataloging needs, possibilities, and data catalog pricing.

Share this article

Related Articles

See all-

7 January 2026

| Collibra experts for hireData governance framework. Best practices & examples

-

6 January 2026

| Collibra experts for hireBuilding a data governance strategy

-

6 January 2026

| Collibra experts for hireData observability vs data quality: key differences